A scientific design

by Jemma Everyhope-Roser

photos by Craig Mahaffey.

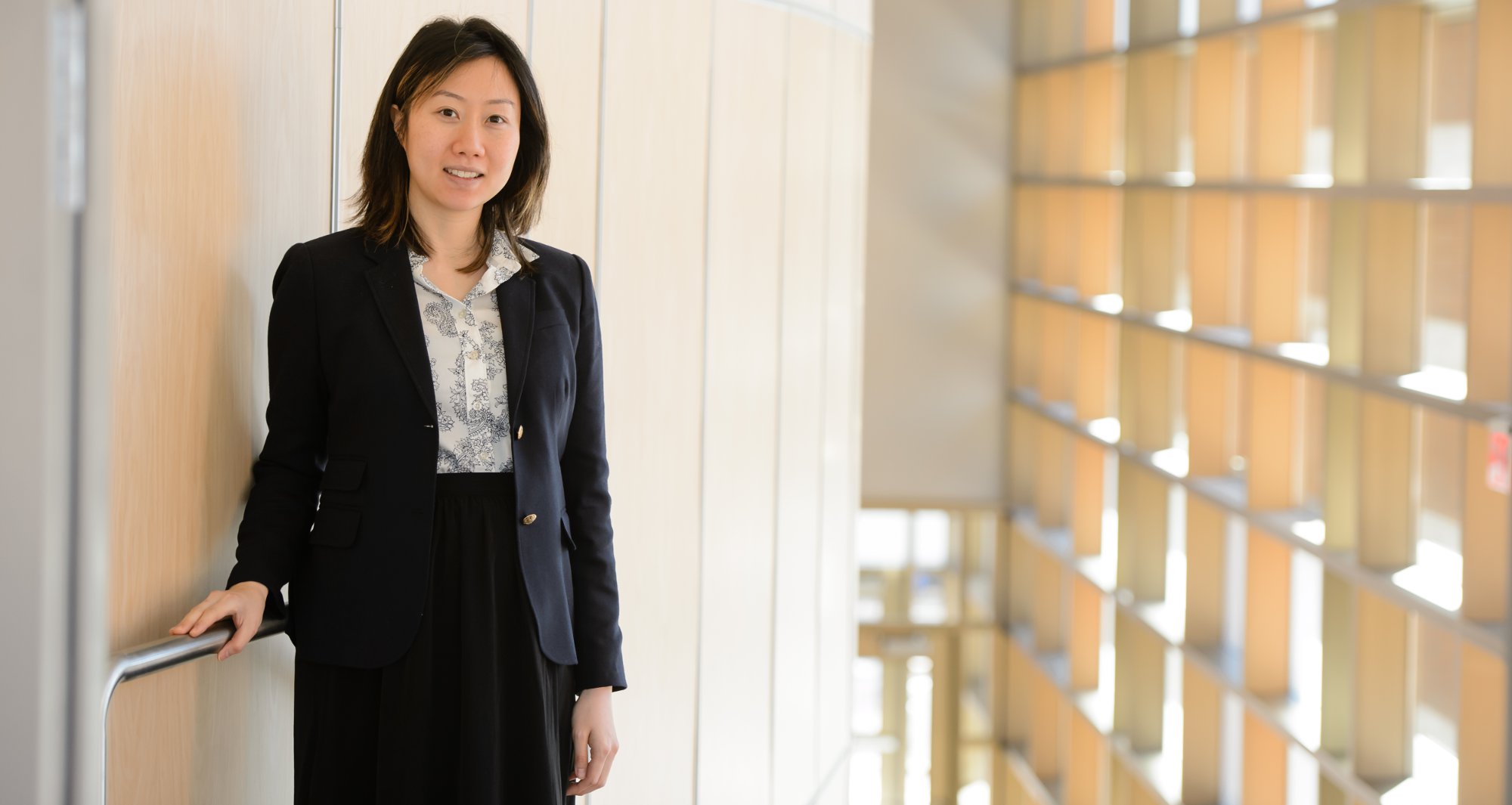

A video popped up on my Facebook feed. Could I count the number of passes made by the basketball players wearing white? I watched, and I counted. And I did not see the man in the gorilla suit march through the players, beat his chest, and then stomp onward.

Called a “selective attention task,” this video meme began as a real-world experiment measuring human perceptual limitations. Derren Brown, a well-known British magician, gave a nod to the test in his stage magic show, Enigma, in which he used perceptual limitations to trick his willing audience. I missed the man in the gorilla suit that time too.

Sara Riggs, an assistant professor in the Department of Industrial Engineering, designs and tests systems in which we can’t trick ourselves. Her work in the field of cognitive ergonomics focuses on accounting for human cognitive and perceptual limitations, and developing displays that support people in various work environments.

“There’s a lot we have to take into account,” Riggs explains. “It’s actually very methodical in the way that we go about doing this, because it’s based on science, on findings from previous studies that inform how we go about doing things. We’re pulling from different studies, from multiple disciplines, including, but not limited to, psychology, engineering (e.g., industrial, systems), human-computer interaction, and ethnography.”

But what are the cognitive and perceptual limitations Riggs considers?

Well, for one thing, a human being can only viably process so much perceptual information at one time.

“For data overload, there is a threshold that may vary slightly from one person to another of how much visual information we can attend to at once,” Riggs says, “while also keeping it in working memory so that we can use it later on.”

The same goes for auditory limitations. Over time, it becomes easy to “tune out” repetitive sounds so that we can focus on the task at hand. But if the sound we tune out is the beep-beep-beep of a heart monitor, and that beeping flatlines without a doctor or nurse noticing, then a human limitation has put a patient at risk.

“So we have to have the right attention, and if there’s a change, we have to be able to notice that,” Riggs says. “Based on our ability to perceive the world, we have certain limitations in how we can perceive it.”

Cognitive limitations can be categorized as biases or heuristics. As an example, Riggs cites confirmation bias, where a person starts out with a hypothesis and searches the environment for clues to support it. Confirmation bias exists because it has benefits. It’s a shortcut that speeds up the deduction process. But, Riggs says, “If you’re going in the wrong direction, this will work against you.

“That’s usually the purpose of these heuristics and biases in terms of how we view the world,” Riggs continues. “Rather than processing all of the information and stimuli around us, we become more efficient. So this is something we have to take into consideration when we design displays, especially in terms of information and how information is presented: How do we account for these biases, these limitations, these heuristics, in terms of how people are perceiving the world around them?”

Where a stage magician uses our perceptual and cognitive limitations against us to create the illusion of magic, Riggs uses scientific data to flip that paradigm, so that our limitations work for us.

Riggs (left) works with students on a prototype of a vest that could help anesthesiologists save lives. The vest conveys information through the tactile channel, enabling quicker response times.

What do Boeing pilots, anesthesiologists, and military personnel have in common?

In high-stress environments, where a lapse puts lives at risk, how can we best present information so that the right decision is made at the right time? As technology multiplies and data maxes us out, designers must confront that question for the Boeing pilots, anesthesiologists, and military personnel of the future. Current state-of-the-art displays are visual and auditory, and an operating room, with its many beeping machines, screens, and readouts with zigzagging lines, is no exception. But a visual display must be watched. And an auditory alert affects everyone within hearing range.

Riggs proposes what might be an answer: a tactile display. With a tactile display, Riggs says, “You can create more or less private displays that don’t disrupt everyone.”

Having reviewed the literature, Riggs has assembled a multidisciplinary team of computer scientists and industrial engineers to help the head of anesthesiology at the Medical University of South Carolina devise the future of the field. “What we’re planning on testing, just to see if it works,” Riggs says, “is a vest where we place a lot of these tactor devices so that a potential anesthesiologist in the future can wear it and get their information via the tactile channel.”

The tactors work much like the technology that makes a cell phone buzz. They are slightly larger than the ones in a mobile device, but they’re designed so that Riggs can manipulate more of their parameters. The tactors can convey information through a combination of three parameters: amplitude, frequency, and pulse pattern.

“Just by manipulating those parameters, you can convey a lot of different information if you assign them appropriately,” Riggs says.

The vest itself would use consistent settings for everyone, rather than letting each anesthesiologist custom-design their own, so that anesthesiologists could move from vest to vest with ease. Personalization would come through adjustable stimulus strength, so that people of differing body types and sensitivities could set the vest to buzz at a comfortable level. Riggs says, “We’re trying to find a nice medium of how to individualize this but keep it consistent so that if we go from one operating room to another there isn’t confusion as to ‘what do these tactile stimuli mean?’”

When asked about the prototype’s first trial, Riggs admits, “I’m kind of a guinea pig in terms of when my students are testing these things. I’m pretty active in the lab.”

To test the vest, Riggs and her students will create a mock-up operating room, bring in participants, train them on the task, and then ask them to perform it (a) with current technology and (b) with the new technology they’re testing. Participants, whether they’re pilots or lit majors, have similar perceptual and cognitive limitations, so Riggs intends to start them out on a simplified version of an anesthesiologist’s work before bringing in actual anesthesiologists. By the time she reaches that stage, Riggs will be able to present a polished product.

Right now, Riggs is nearing the end of a different study. She’s working on displays that help military personnel control unmanned aerial vehicles (UAVs). Currently it takes three people to monitor one UAV: They watch its status, reroute it as necessary, and respond to chat messages. But the military’s grand vision is to have one person monitoring up to nine UAVs.

“If you want to talk about data overload, that’s a prime example,” Riggs says. “So the challenge is how we can best support the operators so that they are best able to do that.”

In Riggs’s UAV experiment, one person monitors the health status of sixteen UAVs, keeps up with chat messages, and reroutes the vehicles as needed. “So it’s a lot of tasks,” Riggs says, which sounds to me like an understatement. But, she says, skilled gamers have a leg up on the rest of us and find this level of multitasking relatively straightforward.

Working under the assumption that most people must look at a display to engage with it, Riggs uses an eye tracker to determine how people juggle these UAVs. The eye tracker not only collects data on where people look and for how long, but it can also provide insights into participant accuracy and response times. After each session, Riggs debriefs the participants about the strategies they used to manage the sixteen UAVs. Soon she will be analyzing the data and the participant feedback to understand how her proposed design performed.

Previously, Riggs worked with Boeing pilots on designing next-generation cockpits. Current technology is still radar-based, and the industry would like to shift to satellite-based information that can show real-time changes in weather data, for example. Riggs found that tactile displays could convey information, though less of it than visual or auditory displays: those channels can carry language, and there is no tactile language. Most importantly, she also discovered that “the response times in the tactile channel can trump that to auditory and visual.”

In high-stress environments, when a split-second decision determines life or death, that could be a critical finding indeed. Work continues on determining which sensory channel should be used to convey what information. Riggs will be “using a sense of touch to augment visual and auditory displays, which are state of the art for most environments now, and honing in on the benefits of a sense of touch to convey different types of information in the most appropriate manner.”

This tactor, similar to the technology that makes a cell phone buzz, is designed so that Riggs can manipulate parameters like amplitude, frequency, and pulse pattern.

Bridging the boundaries between scientific disciplines, man, and machine

“The main part of being an engineer is fixing a problem,” Riggs says. But these days, the multifaceted problems of our increasingly technology-dependent culture aren’t something that one person can tackle alone. They require a team from multiple disciplines and across many agencies.

Riggs seeks “collaborations with external funding agencies outside the university.” Her anesthesiology project works in combination with the Medical University of South Carolina. Her UAV project receives military funding. NASA and the FAA actively look for academic partners to work with them on the future of aviation.

Then there are the teams that Riggs puts together. The anesthesiology project, for example, draws on the expertise of computer scientists, industrial engineers, and medical professionals. As Riggs says, each experiment is “a combination of science and technology.”

The experiments themselves draw on previous studies in multiple disciplines. Controlling for variables in each experiment requires a regimented process and methodical procedures, so that Riggs and her team can be sure they’re testing the variable they intend to test.

“Getting all of the pieces to come together can be a hurdle,” Riggs says. “But I feel at the end of the day that’s very rewarding.”

What Riggs is searching for across all of these experiments is information that applies to more than one domain. Whether it’s telemedicine in the ICU or monitoring UAVs, Riggs wants to extract general principles.

As technology becomes more and more complex, more scientists like Riggs will be working to determine the best interfaces to bridge the gap between man and machine. Riggs says that her experiments give her “hope that my work will solve the lingering questions that will need to be addressed in a future with the advent of new technology.”

Whether it’s in the operating room or in a cockpit, it’s guaranteed our future selves will be processing and picking out patterns from more data than ever before. With personalized signals sent to each individual, we’ll respond faster than ever to real-time, changing situations. It’ll be so intuitive, so easy, it’ll look like sleight of hand—but only because it’ll be designed by science to take advantage of human limitations.